July 2024

This month we released a major new feature that lets human reviewers manually review and correct your document extractions. We also added support for Anthropic large language models (LLMs) and a new LLM-based approach for locating the answers to your prompts in a document.

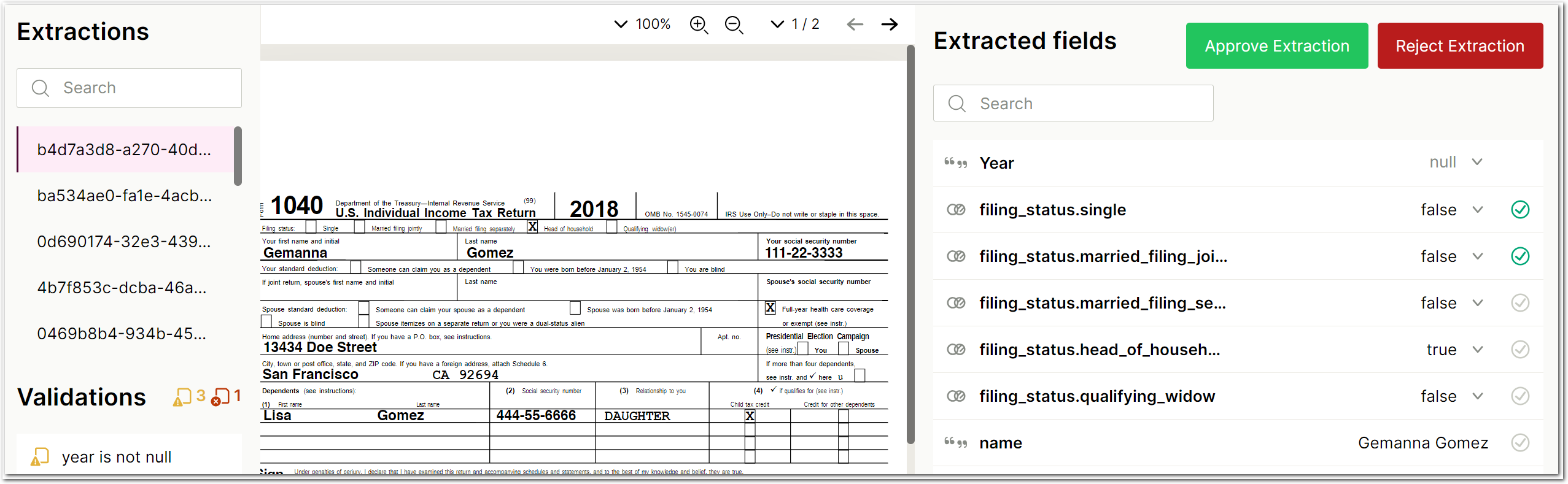

New feature: Human review

If an extraction contains errors, for example because of hard-to-read handwriting, you can now flag it for manual correction by a human reviewer. To flag extractions automatically at scale in production, configure rules based on validations and extraction coverage. Reviewers correct the extracted fields in the Sensible app's Human review tab, then approve or reject the extraction. For more information, see Reviewing extractions and our announcement blog post.
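To make the idea of a flagging rule concrete, here is a minimal Python sketch of the decision logic only, not Sensible's configuration syntax. The `Extraction` class, the `coverage` and `needs_human_review` functions, and the 0.8 threshold are all hypothetical, and defining coverage as the fraction of fields with a non-null value is an assumption.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


# Hypothetical shape of an extraction result; Sensible's real output format differs.
@dataclass
class Extraction:
    fields: dict[str, Optional[Any]]
    validation_errors: list[str] = field(default_factory=list)


def coverage(extraction: Extraction) -> float:
    """Fraction of fields that have a non-null value (assumed definition)."""
    if not extraction.fields:
        return 0.0
    found = sum(1 for value in extraction.fields.values() if value is not None)
    return found / len(extraction.fields)


def needs_human_review(extraction: Extraction, min_coverage: float = 0.8) -> bool:
    """Flag for review if any validation failed or coverage is below the threshold."""
    return bool(extraction.validation_errors) or coverage(extraction) < min_coverage
```

With these assumptions, an extraction with every field filled and no validation errors passes, while one with a missing field (coverage 0.5) or a failed validation is routed to a reviewer.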

New feature: LLM page summaries for locating target data

With the Query Group method's new Search By Summarization parameter, you can troubleshoot cases where Sensible misidentifies the part of the document that contains the answers to your prompts. When you set this parameter, Sensible uses a new completion-only retrieval-augmented generation (RAG) strategy: it prompts an LLM to summarize each page in the document, prompts a second LLM to return the pages most relevant to your prompt based on those summaries, and then extracts the answers to your prompts from those pages.
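The three steps above can be sketched in Python as a summarize-then-select pipeline. This is an illustration under stated assumptions, not Sensible's implementation: `summarize_page` and `rank_pages` are hypothetical deterministic stand-ins for the two LLM prompts (first sentence as summary, word overlap as relevance), and `top_k` and the `answer` callback are hypothetical parameters.

```python
import re
from typing import Callable


def summarize_page(page_text: str) -> str:
    """Hypothetical stand-in for an LLM summary: keep the page's first sentence."""
    return page_text.split(".")[0]


def tokens(text: str) -> set[str]:
    """Lowercased word set used for a crude relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def rank_pages(prompt: str, summaries: list[str], top_k: int) -> list[int]:
    """Hypothetical stand-in for the ranking LLM: score summaries by word overlap."""
    query = tokens(prompt)
    scores = [len(query & tokens(s)) for s in summaries]
    # Stable sort by descending score, so ties keep document order.
    ranked = sorted(range(len(summaries)), key=lambda i: -scores[i])
    return sorted(ranked[:top_k])  # return the chosen pages in document order


def search_by_summarization(
    pages: list[str],
    prompt: str,
    answer: Callable[[str, str], str],
    top_k: int = 1,
) -> tuple[list[int], str]:
    # 1. Summarize every page.
    summaries = [summarize_page(p) for p in pages]
    # 2. Pick the pages whose summaries look most relevant to the prompt.
    selected = rank_pages(prompt, summaries, top_k)
    # 3. Answer the prompt using only the full text of the selected pages.
    context = "\n".join(pages[i] for i in selected)
    return selected, answer(prompt, context)
```

The design point the sketch tries to capture is that page selection works on short summaries, while the final answer still sees the full text of the chosen pages.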

New feature: Support for Anthropic LLMs

With the Query Group method's new LLM Engine parameter, you can choose to use Anthropic LLMs instead of OpenAI LLMs where applicable.

Improvement: Stop extraction at a row for the Text Table and Fixed Table methods

With the new Stop On Row parameter for the Fixed Table and Text Table methods, you can extract only part of a table, stopping at a row you specify.
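As a rough illustration of what stopping at a row means, here is a minimal Python sketch. The `rows_until` function and the `is_stop_row` predicate are hypothetical, and two behaviors are assumptions rather than documented Sensible behavior: the stop row itself is excluded, and the whole table is returned if no row matches.

```python
from typing import Callable

Row = list[str]


def rows_until(rows: list[Row], is_stop_row: Callable[[Row], bool]) -> list[Row]:
    """Return table rows up to, but not including, the first row matching is_stop_row."""
    kept: list[Row] = []
    for row in rows:
        if is_stop_row(row):
            break  # assumed: stop before the matching row
        kept.append(row)
    return kept
```

For example, passing `lambda r: r[0] == "Total"` keeps the line items of an invoice table and drops the totals row and anything after it.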