Advanced LLM prompt configuration

To extract data from a document using LLM-based methods, Sensible submits a part of the document to the LLM. Submitting a part of the document instead of the whole document improves performance and accuracy. This document excerpt is called a prompt's context.

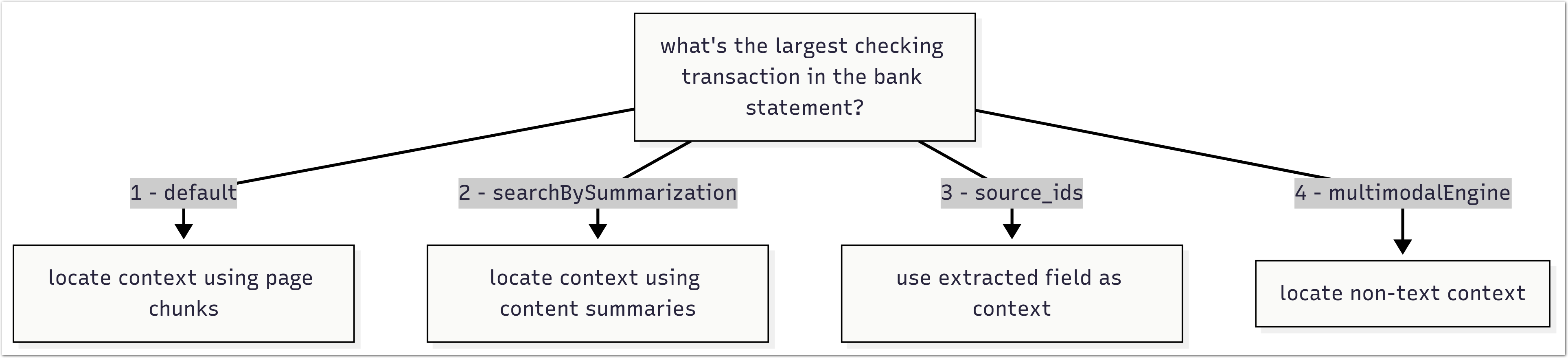

To troubleshoot LLM-based methods, you can configure how Sensible locates a prompt's context using one of the following approaches:

- (Default) Locate context with embeddings scores

- Locate context by summarizing document content

- Locate context by "chaining" or "pipelining" prompts

- Locate non-text images as context

For information about configuring each of these approaches, see the following sections.

(Default) Locate context with embeddings scores

By default, Sensible locates context by splitting the document into equally sized chunks, scoring them for relevancy using embeddings, and then returning the top-scoring chunks as context.

The advantage of this approach is that it's fast. The disadvantage is that it can be brittle.

The following steps outline this default approach and provide configuration details:

- Sensible splits the document into chunks. Parameters that configure this step include:

  - Chunk Count parameter

  - Page Range parameter

  Note: Defaults for these parameters vary by LLM-based method. For example, the default for the Chunk Count parameter is 5 for the Query Group method and 20 for the List method. Each method has a default chunk size, up to one page.

- Sensible selects the most relevant chunks and combines them with page-number metadata to create a "context". Parameters that configure this step include:

  - LLM Engine parameter

- Sensible creates a full prompt for the LLM that includes the context and the descriptive prompts you configure in the method. Sensible sends the full prompt to the LLM.

- Sensible returns the LLM's response.
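The chunk-and-score steps above can be sketched in a few lines of Python. This is a minimal illustration, not Sensible's implementation: the function names, the word-based chunking, and the bag-of-words `embed` function are all assumptions standing in for a real embedding model. The hypothetical `chunk_count` argument plays the role that the Chunk Count parameter plays in the steps above.

```python
import math
import re
from collections import Counter


def split_into_chunks(text: str, chunk_size: int) -> list[str]:
    """Split text into chunks of `chunk_size` words (the last chunk may be shorter)."""
    words = text.split()
    return [
        " ".join(words[i:i + chunk_size])
        for i in range(0, len(words), chunk_size)
    ]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def locate_context(document: str, prompt: str,
                   chunk_size: int = 50, chunk_count: int = 5) -> list[str]:
    """Return the `chunk_count` chunks that score highest against the prompt."""
    chunks = split_into_chunks(document, chunk_size)
    prompt_vector = embed(prompt)
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine_similarity(embed(chunk), prompt_vector),
        reverse=True,
    )
    return ranked[:chunk_count]
```

The sketch also hints at why this approach can be brittle: a chunk that repeats the prompt's wording can outscore the chunk that actually contains the answer.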

The details for this general process vary for each LLM-based method. For more information, see the Notes section for each method's SenseML reference topic, for example, List method.

(Recommended) Locate context by summarizing document

When you configure the Search By Summarization parameter for supported LLM-based methods, Sensible finds context using LLM-generated summaries. Sensible uses a completion-only retrieval-augmented generation (RAG) strategy:

- Sensible prompts an LLM to summarize chunks of the document. If you set the parameter to page, each page is a chunk. If you set it to outline, an LLM generates a table of contents of the document, and each segment of the outline is a chunk.

- Sensible prompts a second LLM to identify the most relevant chunks based on the summaries, then uses their text as the context.
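The two-stage flow above can be sketched as follows. This is an illustration under stated assumptions, not Sensible's implementation: `locate_context_by_summary`, `first_sentence_summary`, and `keyword_picker` are hypothetical names, and the last two are simple stand-ins for the two LLM calls so that the example runs without a model.

```python
import re
from typing import Callable


def locate_context_by_summary(
    chunks: list[str],
    prompt: str,
    summarize: Callable[[str], str],
    pick_relevant: Callable[[str, list[str]], list[int]],
) -> str:
    """Pick context by comparing the prompt to chunk summaries, not chunk text."""
    # Stage 1: summarize every chunk (in practice, a call to the first LLM).
    summaries = [summarize(chunk) for chunk in chunks]
    # Stage 2: select relevant summaries by index (in practice, a second LLM).
    relevant = pick_relevant(prompt, summaries)
    # The context is the full text of the selected chunks, in document order.
    return "\n\n".join(chunks[i] for i in sorted(set(relevant)))


def first_sentence_summary(chunk: str) -> str:
    """Stand-in for an LLM summary: the chunk's first sentence."""
    return chunk.split(".")[0]


def keyword_picker(prompt: str, summaries: list[str]) -> list[int]:
    """Stand-in for an LLM relevance judgment: any word shared with the prompt."""
    prompt_words = set(re.findall(r"[a-z]+", prompt.lower()))
    return [
        i for i, summary in enumerate(summaries)
        if prompt_words & set(re.findall(r"[a-z]+", summary.lower()))
    ]


pages = [
    "Termination. Either party may end this agreement with 30 days notice.",
    "Payment. Invoices are due within 15 days of receipt.",
]
context = locate_context_by_summary(
    pages, "what are the termination terms?", first_sentence_summary, keyword_picker
)
```

Passing the two model calls in as functions keeps the retrieval logic separate from any particular LLM provider.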

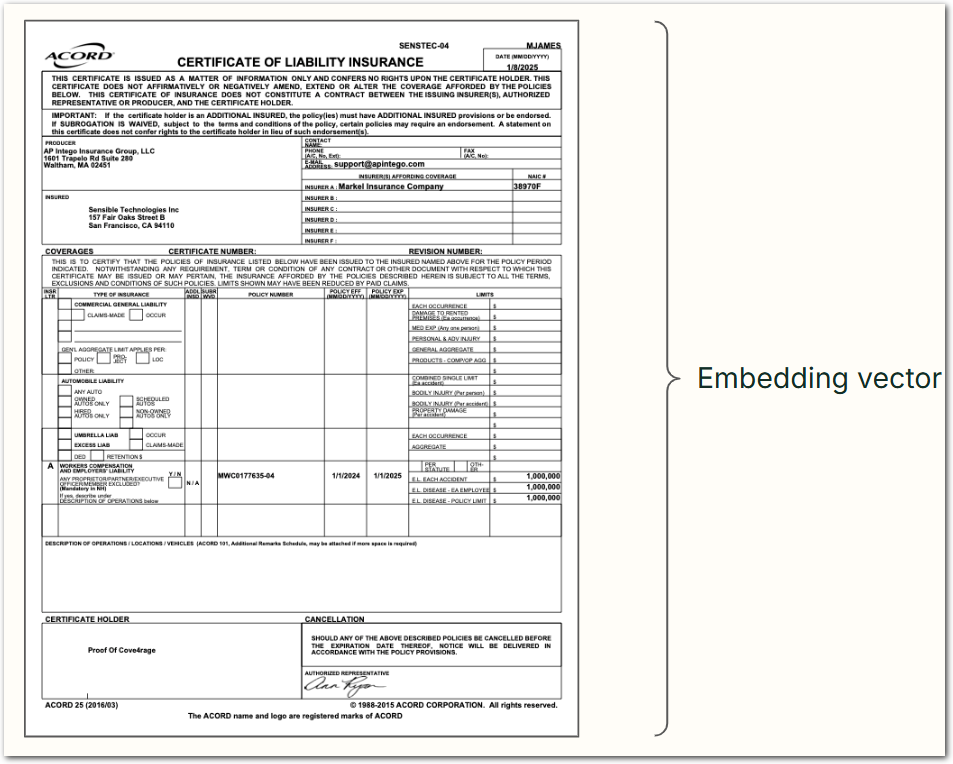

The following image shows how an LLM can outline and summarize a document:

This strategy often outperforms the default approach to locating context. It's useful for long documents in which multiple mentions of the same concept make finding relevant context difficult, for example, long legal documents.

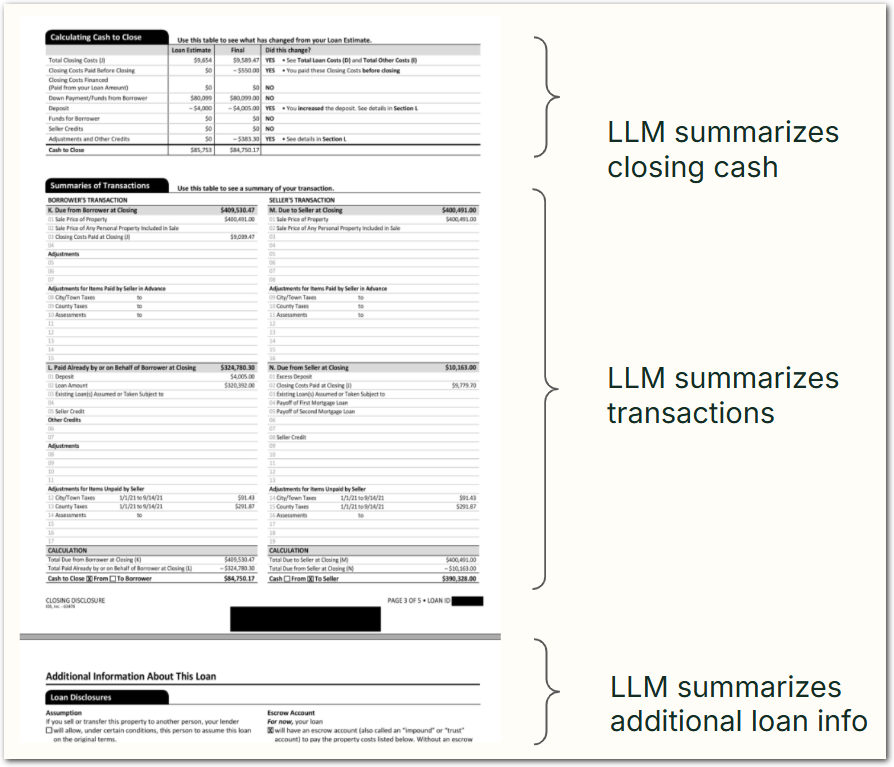

Locate context by pipelining prompts

You can prompt an LLM to answer questions about other fields' extracted data. Specify the extracted data using the Source IDs parameter for supported LLM-based methods. In this case, the context is predetermined: it's the output from the other fields. By pipelining prompts, you can configure agentic workflows to extract document data.

For example, suppose you use the layout-based Text Table method to extract the following data into a snacks_rank field:

| snack | annual regional sales |
| --- | --- |
| apples | $100k |
| corn chips | $200k |
| bananas | $150k |

If you create a Query Group method with the prompt "what is the best-selling snack?", and specify snacks_rank as the context using the Source IDs parameter, then Sensible searches for answers to your question (corn chips) only in the extracted snacks_rank table rather than in the entire document.

Use other fields as context to:

- Narrow down the context for your prompts to a specific part of the document.

- Reformat or otherwise transform the outputs of other fields. For example, you can use this as an alternative to types such as the Compose type with prompts such as "if the context includes a date, return it in mm/dd/yyyy format".

- Compute or generate new data from the output of other fields. For example, prompt an LLM to sum the output of the wages fields extracted from a tax form.

- Troubleshoot or simplify complex prompts that aren't performing reliably. Break the prompt into several simpler parts, and chain them together using successive Source IDs parameters in the fields array.
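To make the idea of a predetermined context concrete, the following sketch builds a prompt whose context is only the output of the fields named as sources. It is an illustration, not Sensible's implementation or its SenseML syntax: `build_pipelined_prompt`, the `extracted` dictionary, and the `report_title` field are hypothetical, and the prompt layout is an assumption.

```python
import json


def build_pipelined_prompt(question: str, extracted_fields: dict,
                           source_ids: list[str]) -> str:
    """Build a prompt whose context is limited to the named source fields."""
    missing = [field_id for field_id in source_ids if field_id not in extracted_fields]
    if missing:
        raise KeyError(f"no extracted output for source fields: {missing}")
    # Only the listed fields reach the LLM; the rest of the document is excluded.
    context = {field_id: extracted_fields[field_id] for field_id in source_ids}
    return f"Context:\n{json.dumps(context, indent=2)}\n\nQuestion: {question}"


# Output of earlier fields; snacks_rank mirrors the example table.
extracted = {
    "snacks_rank": [
        {"snack": "apples", "annual regional sales": "$100k"},
        {"snack": "corn chips", "annual regional sales": "$200k"},
        {"snack": "bananas", "annual regional sales": "$150k"},
    ],
    "report_title": "Regional snack sales",  # hypothetical unrelated field
}

prompt = build_pipelined_prompt(
    "what is the best-selling snack?", extracted, ["snacks_rank"]
)
```

To chain several prompts, the answer to one prompt would be stored under its own field ID and then named as a source for the next prompt.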

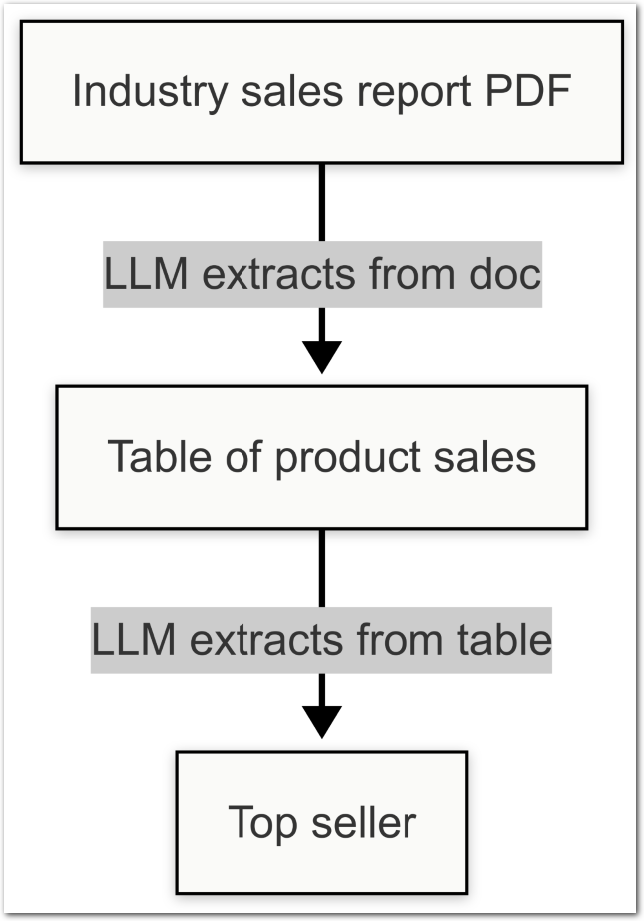

Locate multimodal (non-text) data

When you configure the Multimodal Engine parameter for the Query Group method, you can extract from non-text data, such as photographs, charts, or illustrations.

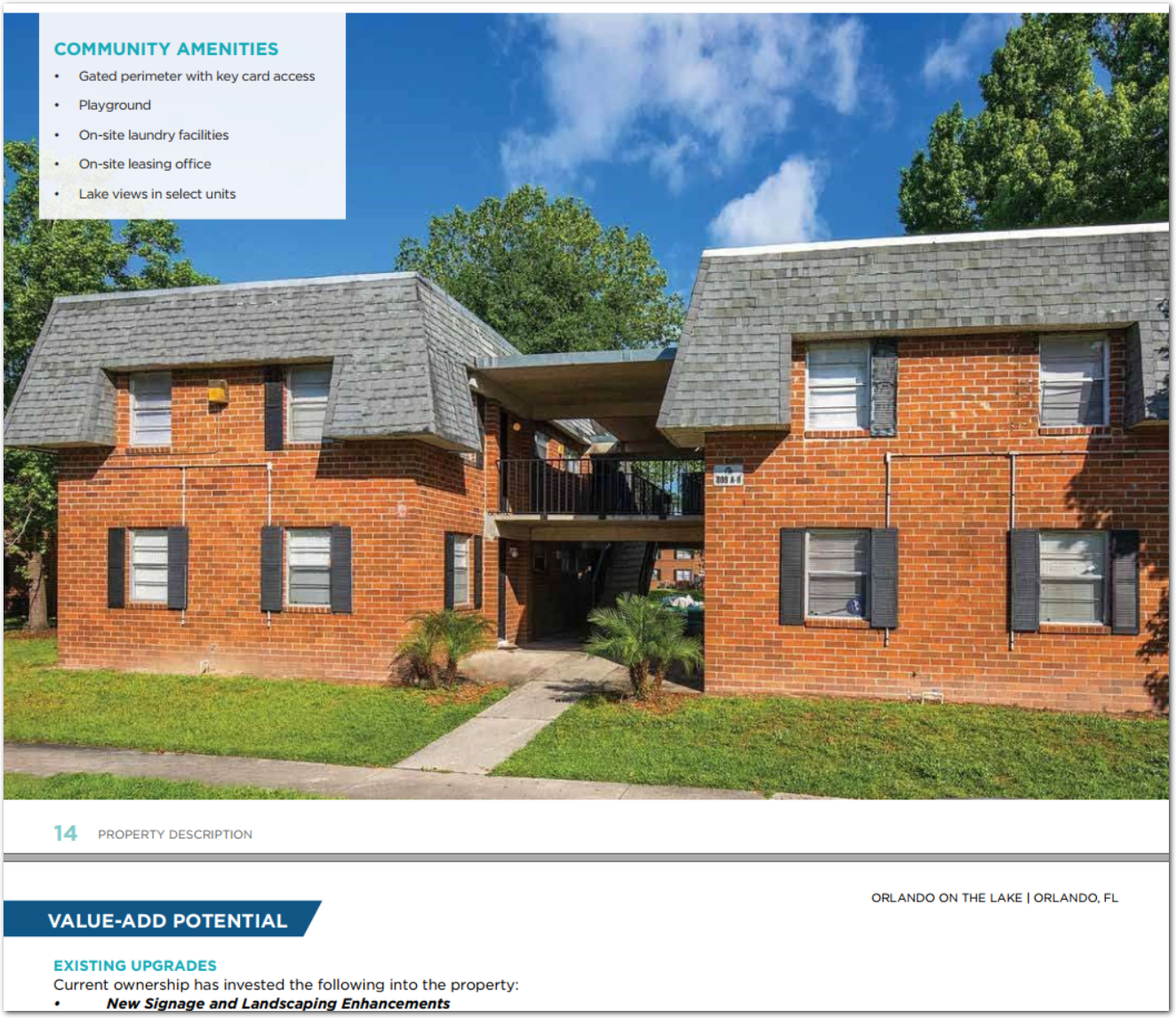

For example, for the following image, you can prompt, "are the buildings multistory? return true or false".

When you extract multimodal data, Sensible sends an image of the relevant document region as context to the LLM. Using the Region parameter, you can configure Sensible to locate the context using either a manually specified anchor and region coordinates or the default page chunk scoring approach.

Troubleshooting

See the following tips for troubleshooting situations in which large language model (LLM)-based extraction methods return inaccurate responses, nulls, or errors.

Interpret confidence signals

Confidence signals are an alternative to confidence scores and to error messages. For information about troubleshooting LLM confidence signals, such as multiple_possible_answers or answer_may_be_incomplete, see Qualifying LLM accuracy.

Create fallbacks for null responses or false positives

Sometimes an LLM prompt works for the majority of documents in a document type, but returns null or an inaccurate response (a "false positive") for a minority of documents. Rather than rewrite the prompt, which can cause regressions, create fallbacks targeted at the failing documents. For more information, see Fallback strategies.

Trace source context

Tracing the document's source text, or context, for an LLM's answer can help you determine if the LLM is misinterpreting the correct text, or targeting the wrong text.

You can view the source text for an LLM's answer highlighted in the document:

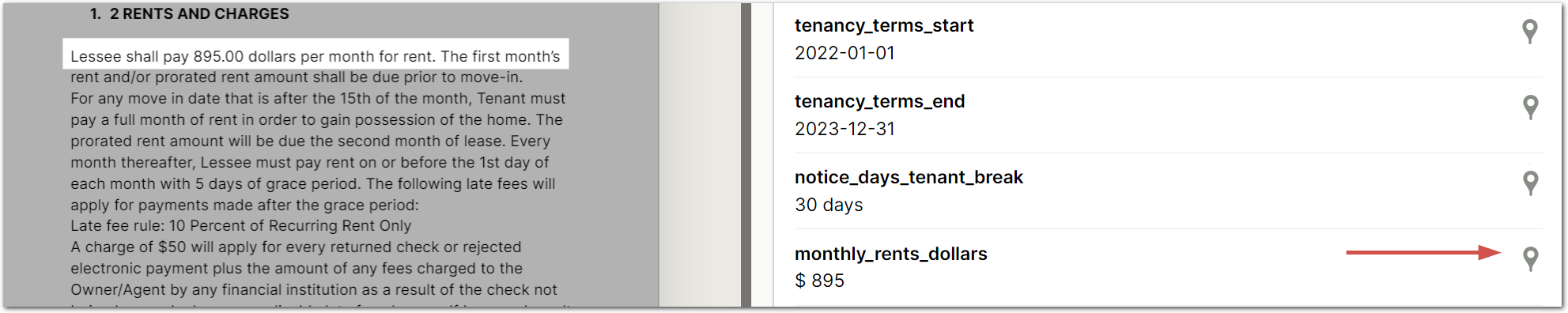

- In the visual output pane, click the Location icon next to a field to view its source text in the document. For information about how location highlighting works and its limitations, see Location highlighting.
